ChatGPT: A gateway to AI generated unit testing

Philip Abraham

Daniel Fiallos Karlsson

With the new release of ChatGPT, software developers’ curiosity about how AI can be integrated into the software development process has intensified. This study examines how ChatGPT can be used in a software testing context.

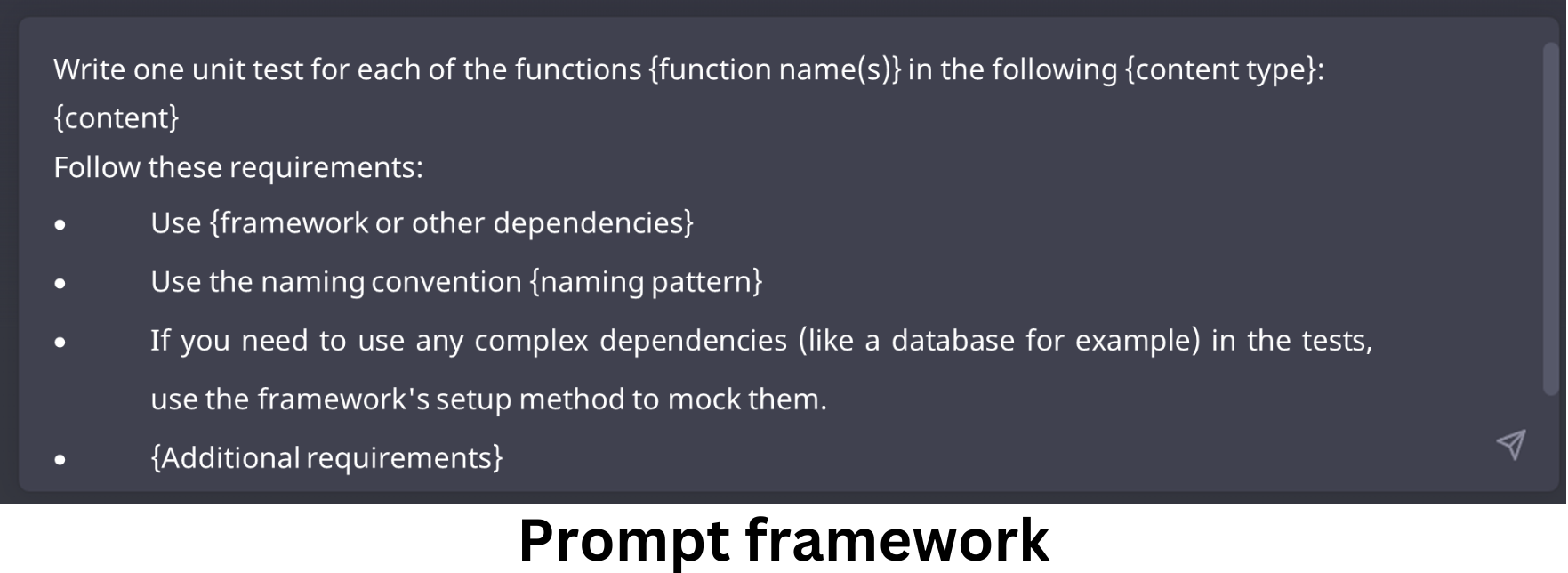

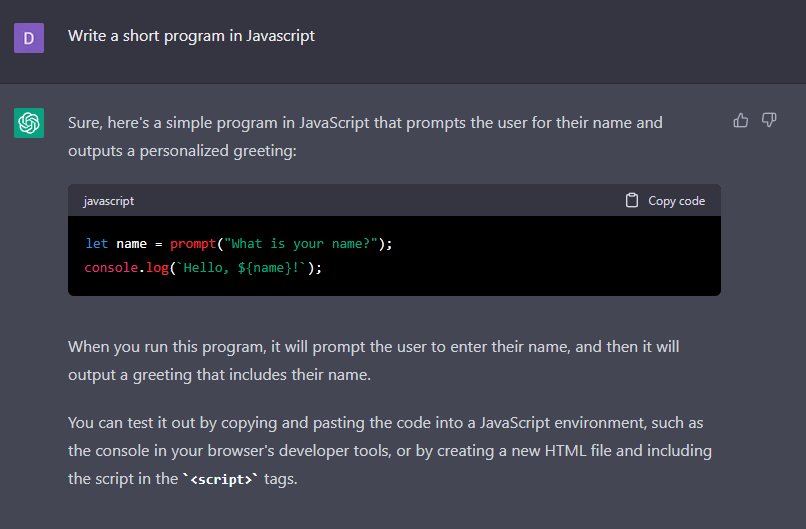

How can ChatGPT help reduce the time and effort spent on writing unit tests? How should such a prompt be structured? And how do these automatically generated unit tests compare to tests written by humans? To answer these questions, an experiment was conducted. In the experiment, ChatGPT was prompted to generate unit tests for predefined C# or TypeScript code written by Toxic Interactive Solutions AB, and each generated test was then evaluated and rated. After a generated unit test had been rated, the next steps were determined and the process was repeated. The results were logged in the form of a diary study. The rating system, which defined what a high-quality unit test should include, was constructed with the help of previous research and interviews with software developers working in the industry (also at Toxic). The interviews also helped in understanding ChatGPT’s perceived capabilities.

The experiment showed that ChatGPT can generate unit tests that meet the defined quality criteria, though with certain issues. For example, reusing the same prompt multiple times revealed a lack of consistency in the responses. The inconsistencies included different testing approaches (for example, how setup methods were used), different areas being tested, and sometimes varying quality. Using the deduced prompt framework reduced the inconsistencies, but the issue may be a current limitation of ChatGPT that a future release could address.